This is the fifth post in a series on working with Healthcare Cost and Utilization Project (HCUP) datasets using Python and other open-source alternatives to SAS and SPSS, which are the two primary data tools supported by HCUP.

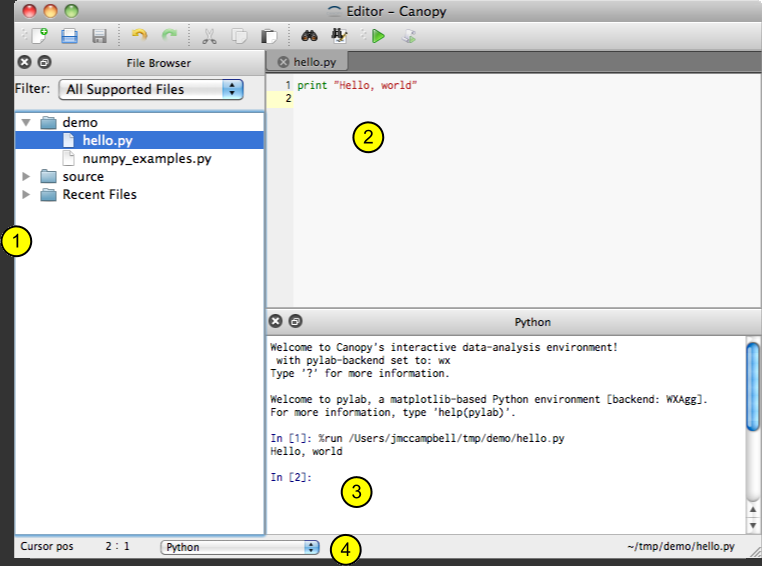

Please note! These instructions build off previous posts. In particular, they assume you are configured with Canopy and PyHCUP, have some basic facility with Python/IPython, and have already read in a small HCUP dataset.

IPython Notebook from this Tutorial

You can download an IPython notebook with code examples from this post.

Now that you have some HCUP data read in, you'll want to do a little housekeeping to get it ready for meaningful analysis. This post will cover two data wrangling tasks you're probably all-too-familiar with: identifying missing values and characterizing/verifying the contents of the data set.

HCUP Sentinel Values

Files from HCUP have several different sentinel values used to mark missing, invalid, or otherwise unavailable data. For a full breakdown, see HCUP's documentation on missing values in http://www.hcup-us.ahrq.gov/db/coding.pdf. In most of my work, the details of this are not vital I just need to mark them as null in the data set.

Replacing Sentinel Values

PyHCUP has a built-in function in its parser module to sweep through an imported HCUP data set and mark all the sentinels as missing values. To use it, pass your imported data set to the parser.replace_df_sentinels() function. This may take some time, depending on the size of the data set you've imported and the speed of your computer.

Check the AGE column (or whichever column you used .describe() with previously) in the replaced and non-replaced data to see the effects.

Notice that, in my data set, the count for this column has dropped by one. When using .describe() on a column, the count reflects the number of non-null values. When I used parser.df_replace_sentinels(), it found and replaced only one sentinel value in the AGE column. Note as well that the minimum value in this column has changed from -88 to 0, which is still young but no longer requires time travel.

Characterizing Numeric Data

Although we are only looked at one column above for brevity, the parser.df_replace_sentinels() function has been applied to every column in the data set. You check describe additional columns by substituting in the column name for AGE in the code we've been using so far. Here is another, looking at the total charges per inpatient stay.

So out of these 10,000 stays, somebody got out for cheap at less than $1,000 and somebody had a rough time with a bill greater than $1.1 million.

It is possible to describe multiple columns side by side by first preparing a list of columns, then referring to that instead of passing a single column name. In Python, you can make a list by putting all the desired list items in a shared set of square brackets, with commas between the items. For example, we could get ages, total charges, and length of stay all at once with something like this.

| AGE | TOTCHG | LOS | |

|---|---|---|---|

| count | 9999.000000 | 10000.00000 | 10000.000000 |

| mean | 43.066307 | 32321.22250 | 6.110600 |

| std | 25.825253 | 50510.43668 | 10.319303 |

| min | 0.000000 | 990.00000 | 0.000000 |

| 25% | 24.000000 | 9669.00000 | 2.000000 |

| 50% | 46.000000 | 18253.50000 | 3.000000 |

| 75% | 63.000000 | 35106.25000 | 6.000000 |

| max | 104.000000 | 1162225.00000 | 271.000000 |

Characterizing Non-Numeric Data

Averages, extreme values, and percentiles are less helpful for describing things like discharge destinations and diagnoses. For non-numeric data, pandas has a built-in .value_counts() method you can use on columns similar to .describe(). As an example, HCUP SID data typically have available a FEMALE column, where a value of 1 indicates the patient was female and 0 indicates the patient was male.

Unlike .describe(), you cannot directly use .value_counts() simultaneously with multiple columns. However, you can use Python to put together a loop that generates value counts for columns in a list. You can also "nest" this kind of count by grouping, such as getting value counts of primary diagnosis by race or discharge destination by homeless status. We'll cover both of these techniques in future posts.

Knowing What Columns Are Available

HCUP provide documentation with detailed descriptions on which columns are available for which states in which year, as well as what the contents of each column mean. But for day-to-day work, we can get a list of all the columns in a DataFrame by invoking DataFrame.columns on any of our data sets. The results should be the same right now whether you use it on df or nulls_replaced, since one is derived from the other.